I was prototyping a small app in xkit and wanted to document this useful tool. When I first saw this launch on HackerNews, I couldn’t quite understand what the purpose was. But now that I’ve spent a bit of time playing with it, I understand it a bit more.

Suppose you are writing a recipe management SaaS and realize that you want to integrate with some other services. Perhaps you want to be able to export the steps of a recipe to a Trello board, or to a Google doc, or to a PDF.

These are all services available on the internet with an API which will allow end users to give your application access to their accounts. This lets you publish to each user’s Google docs account or Trello board.

(I’m not as familiar with services offering PDF generation functionality, but a quick search turns up some options, including some that you can self host.)

There’s a fair bit of hoop jumping in terms of setting up API keys and OAuth consent screens, however.

And this is the problem that xkit solves. If they’ve already written the connection (here’s a list), it is quite simple to add the ability for a user to connect to the service. With no previous experience, I was able to connect to Trello in about an hour. The user experience of connecting the external SaaS application is really smooth and far better than something I could whip up quickly.

If they haven’t written a connector, I don’t believe you can write one yourself. For example, for that PDF service, you’d need to contact the xkit folks and ask them to add one.

This is different than, say, Zapier, because it’s operating at a different level. Zapier is excellent (and has been for years) at letting users connect their apps. But xkit lets you let your users connect apps, basically letting you build a mini Zapier (in terms of connectivity, not functionality).

You can also host your own app catalog if you want to. I didn’t get into this too much, though, so it’s unclear what the benefits of that are.

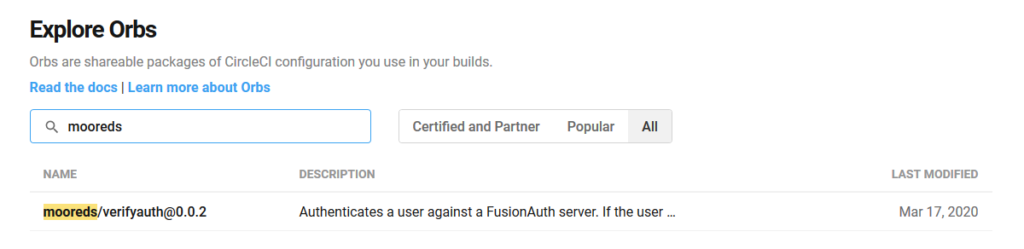

They provide a user data store out of the box, but also integrate with a number of other providers (including FusionAuth). This means you can leverage your existing auth solution and still get the easy integration with other third party APIs.

Their pricing seems reasonable, given what they take off your plate.

Nothing’s perfect, however. I found a few documentation bugs, which I let them know about (they host their docs on readme.com and I found the suggestion process delightful). When I tried to sign up, the service was down, but a quick Tweet exchange resolved the issue within 30 minutes.

It is bizarre to me as an authentication focused company that they don’t have a “forgot password” link on their login pages. The documentation is javascript heavy, with nary a mention of other languages, but that’s understandable as they’re just starting out. It’s also strangely video heavy, which I found a bit distracting; that, however, could just be my learning style.

All in all, if you are looking to integrate third party APIs which require OAuth interactions on the part of your users, you’d be well served to take a look at xkit.

Based on this

Based on this  A few years ago I was working on an API that my client was going to make available to some of their clients. I used

A few years ago I was working on an API that my client was going to make available to some of their clients. I used  I attended the

I attended the