GitHub Workflows are automated jobs that can be triggered by various events against a GitHub repository. They are pretty awesome.

GitHub Actions are a way to encapsulate configuration and functionality in a way that can be easily reused in GitHub Workflows.

I was thinking it’d be fun to create some GitHub Actions (yes, I’m the life of the party), so I sat down a few mornings ago to do this. I was shocked at how easy it was.

I followed a few lines of this tutorial to create a workflow. Then I created an action by following this tutorial. Finally, I edited my workflow to use the new action. That was it.

It was amazingly simple and took me about 30 minutes. I ran into one unrelated issue (to set the executable bit on a shell script in windows, I had to modify the shell script contents in order to ensure the change was sent to the remote repo).

If you take a look, you’ll see these are both toy repositories, to be sure. However, the ability to write jobs which will be executed on a git push, pull request or other events is great and removes toil. Being able to extract common functionality to an action is even better. Finally, the ability to share the action publicly by adding it to the GitHub marketplace is fantastic.

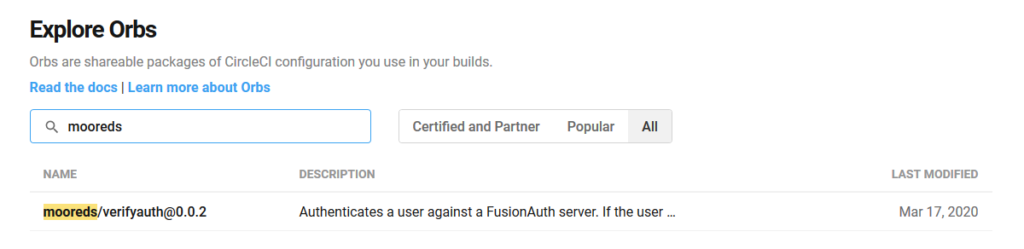

I’ve liked CircleCI for a long time, but if I were them I’d be worried.

One issue I found is that the testing/release cycle is pretty tedious (I’ve mentioned that action debugging to be an issue for a while).

While I was troubleshooting my executable bit error, I had to do the following every time I wanted to test a change:

- make a change in the action repository

- create a new tag

- push it to the remote

- switch to the workflow repository

- bump the action version

- push to the remote

- wait for the workflow to complete

Not horrific, but pretty tedious. I don’t know if there are other options such as local deployment which would reduce that cycle, but that would be swell.

Other than that, 10 out of 10, would write more actions.

A server on which I am working runs this command:

A server on which I am working runs this command: