I’m a big fan of automating all the things and I also believe in the DRY principle. I’ve been using CircleCI for years and noticed that they had added orbs, a way to abstract away repeated configuration. I recently built one and wanted to share my experience.

If you are thinking about building an orb, first take a look at the list of existing orbs. After all, it’s better to reuse code someone else will maintain if it does what you need.

In my case, I wanted to explore building an orb. I dreamed up a use case for which no one else had written an orb. The situation is that you store user data in FusionAuth. Each time a build runs, you want to verify that the user running the build is active and valid before continuing the build. If the user can’t authenticate, fail the build.

Set up the authentication server

I set up a FusionAuth server on EC2 using their instructions. It can’t be on localhost because CircleCI needs to communicate with it during the build. The server didn’t run well on a t2.micro so I ended up springing for a t2.large, where it worked fine. I also had some difficulty installing MySQL on the Amazon Linux AMI, but this Stack Overflow answer helped me out. I added a FusionAuth application and user via the admin panel. I also created an API key and limited it drastically; it could only post to the login endpoint. I tested access with curl like so (ALL_CAPS strings are placeholders you’d want to replace with real values):

curl -s -o /dev/null -w "%{http_code}" -XPOST -H 'Authorization: API_KEY' \
  -H 'Content-Type: application/json' \
  -d '{ "loginId": "USERNAME", "password": "PASSWORD", "applicationId": "APPLICATION_ID" }' \
  http://FUSION_AUTH_SERVER_HOSTNAME:9011/api/login | grep 202 > /dev/null

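FusionAuth returns a 404 status code if the user isn’t authenticated successfully (they don’t exist or have an incorrect password) and, in my setup, 202 if the user logs in successfully (more API information here). As I read the FusionAuth login API documentation, 202 means the user authenticated but isn’t registered for the given application, while a registered user gets 200, so adjust the grep if you register your build users with the application. Note that this login process may skew some of the reporting (around DAUs, for example) so you’ll want to create a separate application just for CI/CD.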

The curl + grep statement above exits with a value of 0 if the user successfully authenticates and with a value of 1 if authentication fails. The former will allow the build to continue and the latter will stop it.
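
To see that exit-status behavior without a FusionAuth server, here is a minimal sketch. The helper name status_allows_build is hypothetical and not part of the orb; it takes a status code as an argument in place of the curl call and applies the same grep test.

```shell
#!/bin/sh
# Hypothetical helper: stands in for the curl call by taking the HTTP
# status code as an argument, then applies the same grep test.
status_allows_build() {
  printf '%s\n' "$1" | grep 202 > /dev/null
}

# A pipeline's exit status is that of its last command, so grep decides.
if status_allows_build 202; then
  echo "202: build continues"
fi

if ! status_allows_build 404; then
  echo "404: build stops"
fi
```

CircleCI stops a job when a run step exits with a non-zero status, which is why the grep result is enough to gate the build.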

The check I want to run worked. Now I just needed to build and publish the orb.

Building the orb

An orb is reusable CircleCI configuration. You have three kinds of configuration you can reuse:

- jobs: a normal CircleCI job, but you can also pass in parameters. A great option if you have a job you want to use across different projects; something like ‘run this formatting tool’.

- executors: an environment in which to execute code (Docker container, VM, etc).

- commands: a set of steps that can be reused. It is lower level than a job and can be used across different jobs.

I chose to create a single command. I also created a job to run while I was developing (I added it as a project in CircleCI). You can see the full source code here.

Here are the interesting bits where I define the command to be shared (ah, yaml):

commands:
  verifyauth:
    parameters:
      username:
        type: string
        default: "user"
        description: "FusionAuth username to try to validate"
      applicationid:
        type: string
        default: "appid"
        description: "FusionAuth application id"
      hostbaseurl:
        type: string
        default: "http://ec2-52-35-2-20.us-west-2.compute.amazonaws.com:9011/"
        description: "FusionAuth host base url"
      password_env_var_name:
        type: env_var_name
        default: BUILDER_PASS
        description: "The user's FusionAuth password is stored in this environment variable"
      fusionauth_api_key_env_var_name:
        type: env_var_name
        default: FUSION_AUTH_API
        description: "The FusionAuth API key is stored in this environment variable"
    steps:
      - run: |
          curl -s -o /dev/null -w "%{http_code}" -XPOST -H "Authorization: ${<< parameters.fusionauth_api_key_env_var_name >>}" -H 'Content-Type: application/json' -d '{ "loginId": "<< parameters.username >>", "password": "'${<< parameters.password_env_var_name >>}'", "applicationId": "<< parameters.applicationid >>" }' << parameters.hostbaseurl >>api/login |grep 202 > /dev/null
      - run: |
          echo User authorized

The command verifyauth takes parameters. These have defaults and descriptions. Anything that you wouldn’t mind seeing checked into a source code repository can be passed as a parameter. You then call the command in your job and pass parameters as needed (we’ll see that below).

Some values, though, are secrets that need to be stored as environment variables (in the project or the context): API keys or passwords, for example. I still wanted whoever uses the orb to be able to configure them. Enter the env_var_name parameter type. This type lets the user specify the name of the environment variable. If I set the password_env_var_name to AUTH_CHECK_PASS, then I need to make sure there is an AUTH_CHECK_PASS environment variable set somewhere in my project containing the password with which we’ll authenticate against FusionAuth. This lets the orb be both configurable and secure.
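
The indirection is easier to see in plain shell. This is only a sketch: the password value is made up, and the eval imitates the substitution CircleCI performs on the config before the shell ever runs.

```shell
#!/bin/sh
# In CircleCI this would be set in the project or a context.
# The value here is a made-up placeholder.
AUTH_CHECK_PASS='example-password'

# What the orb user passes as the parameter: the variable's name,
# not its value.
password_env_var_name='AUTH_CHECK_PASS'

# CircleCI pastes the name into the command text, producing
# ${AUTH_CHECK_PASS}, and the shell expands it at run time.
# eval imitates that two-step lookup.
eval "password=\${$password_env_var_name}"

# Print only the length so the secret itself never reaches the build log.
echo "resolved ${password_env_var_name} to a value of ${#password} characters"
```

Only the name AUTH_CHECK_PASS ever appears in the checked-in configuration; the value stays in the build environment.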

Finally, you can see that the first step of the command is posting login data to the authentication server. Again, if we see anything other than 202 we fail and the build stops. (You’ve seen that curl command before.)
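
One detail in that command deserves a second look: the password expansion sits between two single-quoted strings with no quotes of its own, so the shell would split a password containing spaces into several arguments. Below is a sketch of one way to assemble the request body so that can’t happen. The helper name login_body is hypothetical, and the sketch assumes values contain no double quotes or backslashes, since it does no JSON escaping.

```shell
#!/bin/sh
# Hypothetical helper: builds the JSON login body with printf so every
# value is passed as one quoted argument and is never word-split.
# Assumption: values contain no double quotes or backslashes, because
# this sketch does no JSON escaping.
login_body() {
  printf '{ "loginId": "%s", "password": "%s", "applicationId": "%s" }' \
    "$1" "$2" "$3"
}

# Placeholder values, including a password with a space in it.
body=$(login_body 'USERNAME' 'pass word' 'APPLICATION_ID')
echo "$body"
```

The result could then be handed to curl as -d "$body", with the double quotes keeping it a single argument.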

Publishing the development orb

To be able to use the orb with a different project, I needed to publish the orb (I could have developed the orb inline to avoid this). The publishing instructions are here. The only issue I ran into was that I had to update my CircleCI organization settings and allow “Uncertified Orbs” before I could create a namespace. After that I was able to publish a development version of my orb:

circleci orb publish .circleci/config.yml mooreds/verifyauth@dev:testing

I was in the directory of my orb code and referenced my config. mooreds is my orb namespace, verifyauth is the orb name (which is arbitrary and not connected to the source repository name in any way) and dev:testing is the version of the orb. Note that there are two types of orb version: production versions, which strictly follow semantic versioning, and development versions, which are prefaced by dev: followed by a string that can contain “up to 1023 non whitespace characters”. Development orbs have other limitations: they are not public, are mutable and only last for 90 days. You’ll want to publish your orbs with production versions if you are using them for any purpose other than prototyping or exploration.
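
The two version forms can be told apart with a few shell patterns. This is a sketch built only from the rules described here; orb_version_kind is a hypothetical helper, not CircleCI’s actual validator.

```shell
#!/bin/sh
# Hypothetical helper: classifies an orb version string as
# production, development, or invalid.
orb_version_kind() {
  case "$1" in
    dev:*[[:space:]]*) echo "invalid" ;;      # no whitespace in the label
    dev:?*)
      label=${1#dev:}
      if [ "${#label}" -le 1023 ]; then
        echo "development"
      else
        echo "invalid"
      fi
      ;;
    *[!0-9.]*) echo "invalid" ;;              # only digits and dots allowed
    *.*.*.*|.*|*.|*..*) echo "invalid" ;;     # too many parts or an empty part
    *.*.*) echo "production" ;;               # MAJOR.MINOR.PATCH
    *) echo "invalid" ;;
  esac
}

for v in 0.0.2 dev:testing 1.2 "dev:has space"; do
  echo "$v -> $(orb_version_kind "$v")"
done
```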

I published my orb via the command line, but the docs outline publishing via a CircleCI job.

Testing the orb

Now I wanted a second project to test the orb. Here’s the project source code. Here’s the interesting code:

...
orbs:
  verifyauth: mooreds/verifyauth@dev:testing
jobs:
  build:
    steps:
      - verifyauth/verifyauth: # when called from an external job, the command is namespaced by the name of the orb
          username: "circlecimooreds"
          applicationid: "98113cee-d1a8-4abf-baf5-a6ea742f80a1"
...

You can see that I pull in the orb at the development version, which I’d previously published. Then I call the namespaced command with some parameters. For this command to work, I also needed to set up required environment variables (in this case, BUILDER_PASS and FUSION_AUTH_API because I didn’t pass in any of the env_var_name parameters). If you don’t set those environment variables (or, alternatively, set the parameters to different values, and then set those environment variables), the build will fail no matter what, as the API call won’t succeed.
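
A missing variable shows up only as a failed login, which is hard to diagnose. One option is a guard step that checks the variables first. This is a sketch of the idea, not something the published orb does; require_env is a hypothetical helper and the values are placeholders.

```shell
#!/bin/sh
# Hypothetical guard: fails with a clear message if any of the named
# environment variables is unset or empty.
require_env() {
  missing=0
  for name in "$@"; do
    eval "value=\${$name:-}"
    if [ -z "$value" ]; then
      echo "missing environment variable: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Demo with placeholder values; in CircleCI these come from the
# project or context settings.
BUILDER_PASS='example-password'
FUSION_AUTH_API='example-api-key'
if require_env BUILDER_PASS FUSION_AUTH_API; then
  echo "all required variables are set"
fi
```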

I then pushed this sample project up to CircleCI and ran a few builds to make sure the parameters were being picked up.

Publishing the production orb

Now that I had an orb that was parameterized and exposed the command I wanted to share, I needed to publish it for everyone to use. Note that your configuration code is entirely exposed if you publish an orb. You can see the source of any orb via the circleci orb source command. For example, circleci orb source mooreds/verifyauth@0.0.2 will show you the entire source of my sample orb. They warn you a number of times about this.

To promote a development orb to production, first republish the dev version so it includes your latest changes: circleci orb publish .circleci/config.yml mooreds/verifyauth@dev:testing. Then promote it: circleci orb publish promote mooreds/verifyauth@dev:testing patch.

Note that the patch argument at the end of the promote command bumps the patch number (0.0.1 -> 0.0.2) but you can also bump the minor and major numbers. Any changes you make to a production orb require you to publish and promote it again; production orb versions are immutable. For instance, I wanted to update the description of some parameters, but had to publish an entirely new version.
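
The effect of the three bump types can be sketched in a few lines of shell. bump_version is a hypothetical helper that assumes the usual semantic versioning rule of resetting the lower segments to zero; it does not call CircleCI.

```shell
#!/bin/sh
# Hypothetical helper: computes the next version for a bump type,
# assuming standard semantic versioning increment rules.
bump_version() {
  version=$1
  segment=$2
  major=${version%%.*}
  rest=${version#*.}
  minor=${rest%%.*}
  patch=${rest#*.}
  case "$segment" in
    major) major=$(($major + 1)); minor=0; patch=0 ;;
    minor) minor=$(($minor + 1)); patch=0 ;;
    patch) patch=$(($patch + 1)) ;;
    *) echo "unknown segment: $segment" >&2; return 1 ;;
  esac
  echo "$major.$minor.$patch"
}

bump_version 0.0.1 patch   # prints 0.0.2
bump_version 0.0.2 minor   # prints 0.1.0
bump_version 0.1.0 major   # prints 1.0.0
```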

After publishing, you’d want to update any projects that use the orb to use the production version.

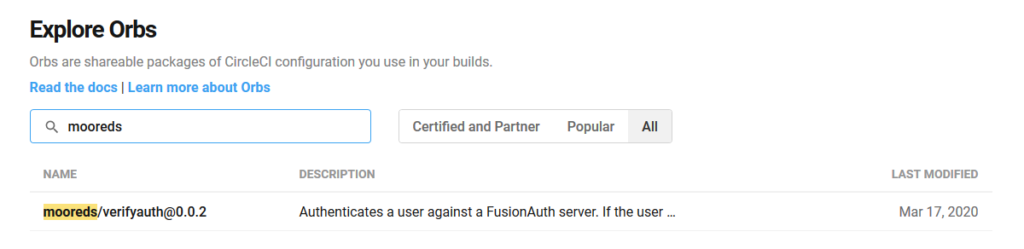

The listing of my published orb

Areas for further work

This was a slightly contrived example. I wanted to gain some experience both with FusionAuth and with CircleCI (I have friends who work at both companies). There are a number of areas where this could be improved:

- authenticate against a different authentication server (LDAP, Okta, AWS IAM)

- store additional information about the user in the authentication database (for instance, which projects they can build) and convert the authentication curl command into an authorization command

- run the identity server over SSL (I just used HTTP because it was easier to get up and running, but that’s obviously a production no-no)

- pull the user and password from the build environment. It’s pretty clear to me how you’d pull the user (there’s a CIRCLE_USERNAME environment variable) but I’m not sure how to pass the password. I can think of a few solutions:

  - don’t log in at all, just allow the API key to pull user data and match on the username (this is probably the best option)

  - pass the password via a pipeline parameter, which means you’d have to set up an API call to build

  - have one common password for all users in the FusionAuth system, and use it only for access control to the build pipeline

  - make the password the same as the username in the FusionAuth system, and use it only for access control to the build pipeline

In conclusion

If you want to interact with external services from within CircleCI, check out the list of existing orbs.

If you have a service that you want to make it easier for CircleCI users to interact with and use, create an orb and publish it.

If you are working with CircleCI and have duplicate configuration that you want to share between projects, setting up your own orbs is a great idea. Orbs are flexible and easy to parameterize. If you’re OK with your configuration being public (it wasn’t clear to me if there was any way to keep the configuration private), you can encapsulate your build and deploy best practices in an easy-to-consume manner.
